Author: Luke Pirtle, Director of IP Development

Overview

Performance optimization inside NetSuite is usually done incorrectly because of misunderstandings about where to optimize, what to optimize, how to optimize it, and what NOT to optimize.

There are numerous statements and definitions of good code all over the internet written by various people with varying credentials. Most definitions bear a resemblance to the following.

The code must be:

• Readable

• Scalable

• Testable

• Able to Fail Gracefully

• Easily Extendable

• Reusable

The biggest takeaway from this list is that performance is not on it at all; at most, it is implied by scalability. A few definitions even warn against premature optimization, calling it harmful. It cannot be overstated that quality code is not necessarily the fastest-running code; rather, quality code follows the tenets above. If a solution is 10x faster but has intermittent errors and doesn’t report when it fails, you’ll end up with an upset client whose integration has been turned off for a month without any notice.

Performance should be considered, but only after the other tenets are addressed. It is typically an afterthought and should be tackled only if requested. A solution is rarely “too slow,” so there is no reason to risk a bug or a client escalation with an unsolicited update. Before optimizing, always write good quality code.

Optimization: Break Out and Isolate

What needs to be optimized is commonly misunderstood. The first step in optimization is to break the solution out into its parts and benchmark them to identify what should be prioritized. Developers often want to create cool, clever solutions and use this as an excuse to theorize about what needs an upgrade instead of investigating further. Because that work is fun, it can lead to severely overcomplicated data structures and algorithms instead of the legwork that produces tangible results.

For example, I once had a solution that took about two seconds to commit a line on a sales order, which made for a painful user experience. I optimized it with bulk searches and async calls and made it as streamlined as possible. Then I discovered that my script had never taken more than 0.1 – 0.2 seconds to run on average. The slowdown came from the absurd number of custom fields on the transaction line (over 250), more than 25% of which were list/record fields. Processing those extra columns and references accounted for 95% of the time needed to commit the line.

After a week or two in the weeds, I had shaved off a few tenths of a second at most. Testing the same pre-optimized code base on a standard form without these fields resulted in instantaneous performance. No matter how much I optimized the script, the commit could never have taken much less than the 2 seconds I started with while those fields were on the form. I was fighting an unwinnable battle, and I only did this analysis at the end, when I ran out of ideas for increasing performance further. Start with the low-hanging fruit and work your way up the tree. Sometimes you’ll need to climb all the way to the top, but typically you can make quick and easy changes that bring a solution into an acceptable range.
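Before changing any code, it helps to time each stage on its own. The following is a minimal sketch that assumes nothing about NetSuite’s APIs. The hypothetical `timeStage` helper wraps each stage with `Date.now()` wall-clock timing, and the `busyWait` calls are placeholders standing in for a search and a save. In a real script, the stages would be your actual searches, logic, and saves. As the story above shows, you should also compare against time spent outside your code entirely, such as the same action on a standard form.

```typescript
// Total milliseconds spent in each named stage.
type Timings = Record<string, number>;

// Runs one stage, adds its wall-clock duration to `timings`, and returns the
// stage's result so it can feed the next stage.
function timeStage<T>(timings: Timings, stage: string, fn: () => T): T {
  const start = Date.now();
  const result = fn();
  timings[stage] = (timings[stage] ?? 0) + (Date.now() - start);
  return result;
}

// Stage names from slowest to fastest: the order in which to investigate.
function slowestFirst(timings: Timings): string[] {
  return Object.keys(timings).sort((a, b) => timings[b] - timings[a]);
}

// Placeholder workload that blocks for roughly `ms` milliseconds.
function busyWait(ms: number): void {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Intentionally empty: simulates time spent in a slow stage.
  }
}

const timings: Timings = {};
const ids = timeStage(timings, "lookup", () => {
  busyWait(50); // stands in for a search or network call
  return [1, 2, 3];
});
const total = timeStage(timings, "logic", () => ids.reduce((sum, id) => sum + id, 0));
timeStage(timings, "save", () => busyWait(5)); // stands in for a record save

console.log(timings, slowestFirst(timings));
```

Sorting the stages slowest-first gives you the order to investigate. If your own code is only a small slice of the total, the bottleneck is somewhere else.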

What to Optimize

HTTPS Requests and Network Calls

Computers are fast and have been for a while. For a frame of reference, CPU speed is rated in gigahertz (GHz), a measure of clock cycles per second. A 1 GHz processor completes a billion clock cycles every second. HTTPS requests, however, have to travel an actual physical distance, and every request you make drastically slows things down.

ISPs and data engineers use numerous strategies and caches to reduce the number of calls and the distance they must travel, but there is always a physical slowdown. HTTPS calls usually take around 1.5 seconds on average, while lines of code execute in less than a millisecond. HTTPS calls should be consolidated and minimized. It is far “cheaper” to do computations locally than to make multiple HTTPS calls, and you should use bulk operations wherever possible. As a simple analogy, consider going to the grocery store several times throughout the day, once for each ingredient, versus going once with a list and getting everything at once.
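The grocery-list idea can be sketched in a few lines. `FakeRemoteService` is a hypothetical stand-in for any remote endpoint. It does no real networking; it only counts round trips. The number of trips, not the work done per trip, dominates when each call costs on the order of a second.

```typescript
// Hypothetical stand-in for a remote endpoint: every method call counts as
// one network round trip, which is the expensive part.
class FakeRemoteService {
  roundTrips = 0;
  private prices: Record<string, number> = { apple: 1.25, bread: 3.5, milk: 2.1 };

  // One request per item: N items cost N round trips.
  getPrice(item: string): number {
    this.roundTrips++;
    return this.prices[item] ?? 0;
  }

  // One request for the whole list: N items cost 1 round trip.
  getPrices(items: string[]): Record<string, number> {
    this.roundTrips++;
    const result: Record<string, number> = {};
    for (const item of items) {
      result[item] = this.prices[item] ?? 0;
    }
    return result;
  }
}

const shoppingList = ["apple", "bread", "milk"];

// Several trips to the store: one call per ingredient.
const perItem = new FakeRemoteService();
const perItemPrices = shoppingList.map((item) => perItem.getPrice(item));

// One trip with a list: a single bulk call.
const bulk = new FakeRemoteService();
const bulkPrices = bulk.getPrices(shoppingList);

console.log(perItem.roundTrips, bulk.roundTrips); // 3 1
```

Both approaches return the same prices; only the number of round trips differs. The bulk version assumes the endpoint accepts a multi-item request, which not every API does.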

Optimization Tips:

• Try to consolidate multiple calls to external resources.

• Use asynchronous calls, when possible, so your script isn’t blocked while waiting on these requests.

• Avoid unnecessary calls by removing relics (calls your code no longer needs).

• Cache values and files locally when possible, and look them up only when necessary.
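Caching can be as simple as remembering what you have already fetched. This is a minimal in-memory sketch. `createCachedLookup` and `fetchExchangeRate` are hypothetical names, and the counter stands in for real network or database calls. The cache lives only for the current execution. For values that should persist between script executions, NetSuite provides the N/cache module.

```typescript
// Wraps an expensive lookup (network call, file read, search) so each key is
// fetched at most once; repeated requests are answered from memory.
function createCachedLookup<K, V>(fetchValue: (key: K) => V): (key: K) => V {
  const cache = new Map<K, V>();
  return (key: K): V => {
    if (cache.has(key)) {
      return cache.get(key) as V;
    }
    const value = fetchValue(key);
    cache.set(key, value);
    return value;
  };
}

// Hypothetical expensive lookup that counts how often it actually runs.
let remoteCalls = 0;
function fetchExchangeRate(currency: string): number {
  remoteCalls++;
  const table: Record<string, number> = { EUR: 1.1, GBP: 1.3 };
  return table[currency] ?? 1;
}

const getRate = createCachedLookup(fetchExchangeRate);
const lineCurrencies = ["EUR", "GBP", "EUR", "EUR", "GBP"];
const rates = lineCurrencies.map((currency) => getRate(currency));

console.log(rates, remoteCalls); // [ 1.1, 1.3, 1.1, 1.1, 1.3 ] 2
```

Five lines with two distinct currencies trigger only two lookups.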

API Calls

Most API calls in NetSuite send a request to a server, perform a database operation, or use some other internal resource on NetSuite’s network. These requests are optimized and travel shorter distances, but they have the same drawbacks as the HTTPS calls mentioned above. Every search, query, record load, file save, etc. makes a call to a server or database and should be minimized or consolidated into bulk operations.

Optimization Tips:

• Don’t use database operations unless you need them. Use record.load only if you are going to write to the record or need something that is available only by loading it; otherwise, use the search module instead.

• Prioritize bulk searches. If you have to look up a value for each line on a transaction, identify all elements up front and run a search to get them all at once.

• Remove debugging logs once you have a working solution. You don’t need to remove every log: keep the informative, useful ones and remove the “Got here” and spot-check variable logs.

• Change log levels when you are not debugging. Switching a script from “Debug” to “Audit” or “Error” prevents log noise and improves performance.
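The bulk-search tip can be sketched as three steps: collect every ID the lines need, fetch them all in one call, and then apply the results locally. The `OrderLine` shape, the `lineWeights` function, and the injected `BulkLookup` are hypothetical. In a real script, the lookup would be a single search filtered on all of the IDs (for example, with an “anyof” filter) rather than one search per line.

```typescript
// Hypothetical transaction line: only the item ID and quantity are known up front.
interface OrderLine {
  itemId: string;
  quantity: number;
}

// Hypothetical bulk lookup: one call that returns a value for every requested ID.
type BulkLookup = (itemIds: string[]) => Map<string, number>;

// Computes the shipping weight of each line using a single bulk lookup.
function lineWeights(lines: OrderLine[], lookupWeights: BulkLookup): number[] {
  // 1. Identify every element up front, without duplicates.
  const uniqueIds = Array.from(new Set(lines.map((line) => line.itemId)));
  // 2. Fetch them all at once.
  const weightById = lookupWeights(uniqueIds);
  // 3. Apply the results locally, which is cheap compared with the lookup.
  return lines.map((line) => (weightById.get(line.itemId) ?? 0) * line.quantity);
}

// Usage with a fake lookup that counts how many times it is called.
let lookupCalls = 0;
const fakeLookup: BulkLookup = (itemIds) => {
  lookupCalls++;
  const catalog: Record<string, number> = { A: 2, B: 0.5 };
  return new Map(itemIds.map((id): [string, number] => [id, catalog[id] ?? 0]));
};

const weights = lineWeights(
  [
    { itemId: "A", quantity: 3 },
    { itemId: "B", quantity: 4 },
    { itemId: "A", quantity: 1 },
  ],
  fakeLookup
);
console.log(weights, lookupCalls); // [ 6, 2, 2 ] 1
```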

CSV

CSV import is a robust, simple, and very performant way to create and update records in NetSuite. You can multithread imports, run them on multiple queues, and disable scripts and workflows if you don’t need them. Non-technical resources can run imports manually, and server-side scripts can also trigger them for complex automations. You don’t have as much control over the logic as you do with a script, but that control usually isn’t required for bulk operations, or the logic can be applied to the data beforehand.

Optimization Tips:

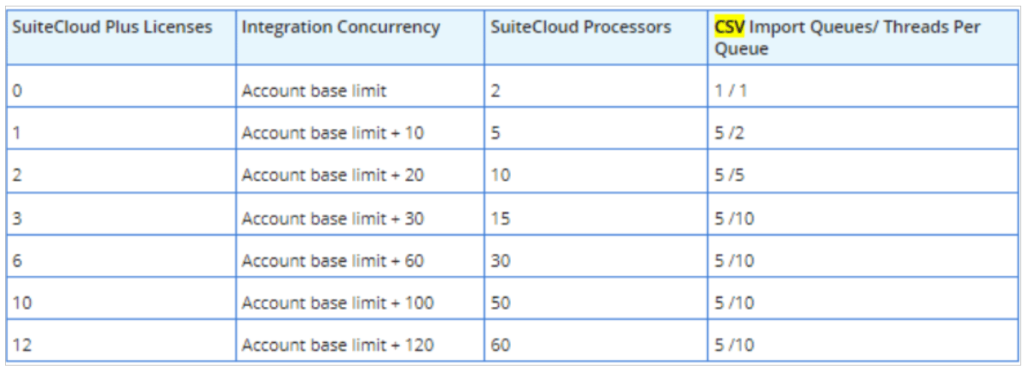

- SuiteCloud Plus licenses are key. With 0 licenses, you have 1 thread and 1 queue for the entire account. With 1 license, you have 2 threads and 5 queues, which is roughly 10x the speed. SuiteCloud Plus licenses can be prohibitively costly, so take this into account in your design.

- Use CSVs whenever possible. They are easy to set up, faster to execute than SuiteScript, and provide built-in error handling. Developers tend to favor scripts, but the performance and simplicity of CSVs can’t be denied. Use the best tool for the job.
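A CSV import can’t apply much logic of its own, so one pattern is to do the transformation in a script first and give the import clean rows. Below is a minimal sketch of that preparation step. `csvField` and `toCsv` are hypothetical helpers that quote fields following the common RFC 4180 convention, and the column names are placeholders. Submitting the resulting file, whether manually through the Import Assistant or from a server-side script, is not shown.

```typescript
// Quotes one CSV field following the common RFC 4180 convention: wrap it in
// double quotes if it contains a comma, quote, or line break, and double any
// embedded quotes.
function csvField(value: string | number): string {
  const text = String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Builds CSV text from rows that were already transformed in the script.
function toCsv(header: string[], rows: Array<Array<string | number>>): string {
  const allRows: Array<Array<string | number>> = [header, ...rows];
  return allRows.map((row) => row.map((value) => csvField(value)).join(",")).join("\n");
}

const csv = toCsv(
  ["External ID", "Memo", "Quantity"],
  [
    ["SO-1001", "Rush, please", 3],
    ["SO-1002", 'Customer said "ASAP"', 1],
  ]
);
console.log(csv);
```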

Map / Reduce for Multithreading

The Map/Reduce design pattern is powerful. However, developers often just need a multithreading option and skip the map or reduce stage. Typically, the slowest, most process-intensive tasks are running searches, saving records, and making network requests. Multithreading these tasks spreads the highest-latency code in your script across parallel processors, saving you precious time.
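Map/Reduce manages the threading for you, so the following is not SuiteScript. It only illustrates why parallelism pays off for high-latency work. `runWithConcurrency` and `slowCall` are hypothetical. `slowCall` uses `setTimeout` to simulate a call that spends its time waiting, the way a record save or HTTPS request does. With a limit of 3, six 100 ms calls should finish in roughly two rounds instead of six.

```typescript
// Runs async tasks with at most `limit` in flight at once: the same idea as
// handing independent work items to several processors in parallel.
async function runWithConcurrency<T>(
  tasks: Array<() => Promise<T>>,
  limit: number
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0;

  // Each worker claims the next unstarted task until none remain. The
  // check-and-increment is synchronous, so two workers never claim the same task.
  async function worker(): Promise<void> {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const workerCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Simulated high-latency call: waits `ms` milliseconds, then returns value * 2.
function slowCall(value: number, ms: number): Promise<number> {
  return new Promise<number>((resolve) => setTimeout(() => resolve(value * 2), ms));
}

const tasks = [1, 2, 3, 4, 5, 6].map((n) => () => slowCall(n, 100));
const started = Date.now();
runWithConcurrency(tasks, 3).then((results) => {
  console.log(results, `${Date.now() - started} ms`);
});
```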

Optimization Tips:

• SuiteCloud Plus licenses are key. With 0 licenses, you have 2 SuiteCloud processors for the entire account. With 1 license, you have 5, so far more work can run in parallel. SuiteCloud Plus licenses can be prohibitively costly, so take this into account in your design.

• Prefetch static values (such as metadata for queues) once, up front, instead of looking them up repeatedly.

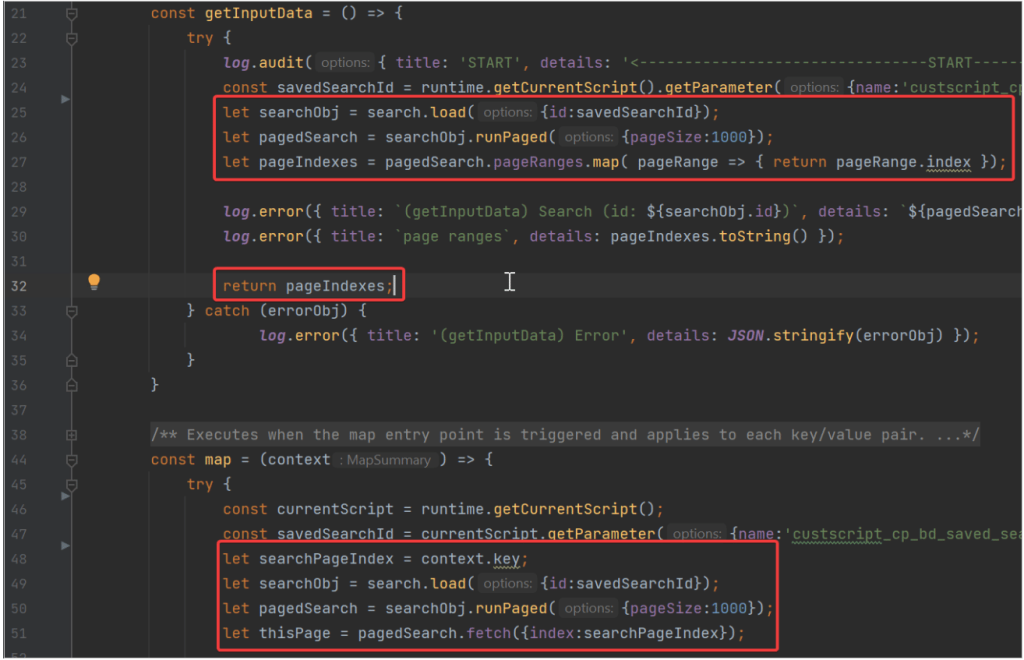

• Break out searches if they are large and complicated. If possible, hand individual search pages to the map stage and run them there to multithread your search. This also prevents timeouts for large or complex searches.

• Break out API calls into separate threads to multithread the most impactful part of the process.
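In SuiteScript 2.x, a paged search (`search.runPaged` with a `pageSize`, then fetching individual pages by index) is one way to split a large search. The sketch below does not call N/search. It is plain TypeScript showing how a result count might be turned into independent, page-sized work items that a `getInputData` stage could hand to `map`, with each map call fetching and processing one page. `PageTask` and `buildPageTasks` are hypothetical names.

```typescript
// One unit of map-stage work: which page to fetch and which rows it covers.
interface PageTask {
  pageIndex: number;
  firstRow: number; // zero-based, inclusive
  lastRow: number; // zero-based, inclusive
}

// Splits `totalRows` results into pages of `pageSize` rows so each page can be
// fetched and processed independently, for example one page per map call.
function buildPageTasks(totalRows: number, pageSize: number): PageTask[] {
  if (pageSize <= 0) {
    throw new Error("pageSize must be positive");
  }
  const pages: PageTask[] = [];
  for (let start = 0; start < totalRows; start += pageSize) {
    pages.push({
      pageIndex: pages.length,
      firstRow: start,
      lastRow: Math.min(start + pageSize, totalRows) - 1,
    });
  }
  return pages;
}

const pageTasks = buildPageTasks(2500, 1000);
console.log(pageTasks.length); // 3
console.log(pageTasks[2]); // { pageIndex: 2, firstRow: 2000, lastRow: 2499 }
```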

What NOT to Optimize

You do not need to optimize anything at the JavaScript level. You should not be trying to tune NetSuite code the way Google or Apple tune theirs. I can imagine the developers who use binary trees and layers of TypeScript screaming right now, but if you use binary trees in JavaScript on NetSuite, you are doing it wrong. There is no room for preference or subjectivity here. This holds specifically for the NetSuite ecosystem because of its size and typical use cases. NetSuite’s core demographic is small to medium-sized businesses, with an enterprise-level client here and there. This business model and demographic do NOT benefit from micro-optimizations that save milliseconds over millions of computations by leveraging advanced data structures and algorithms. If you are struggling that much, you are on the wrong system or need to simplify your process.

- Google developers deal with billions of search results, while NetSuite searches are capped at 10,000 data results.

- Google works with C++, Java, and Python. NetSuite runs JavaScript, implemented to the ECMAScript standard, on a Java Virtual Machine.

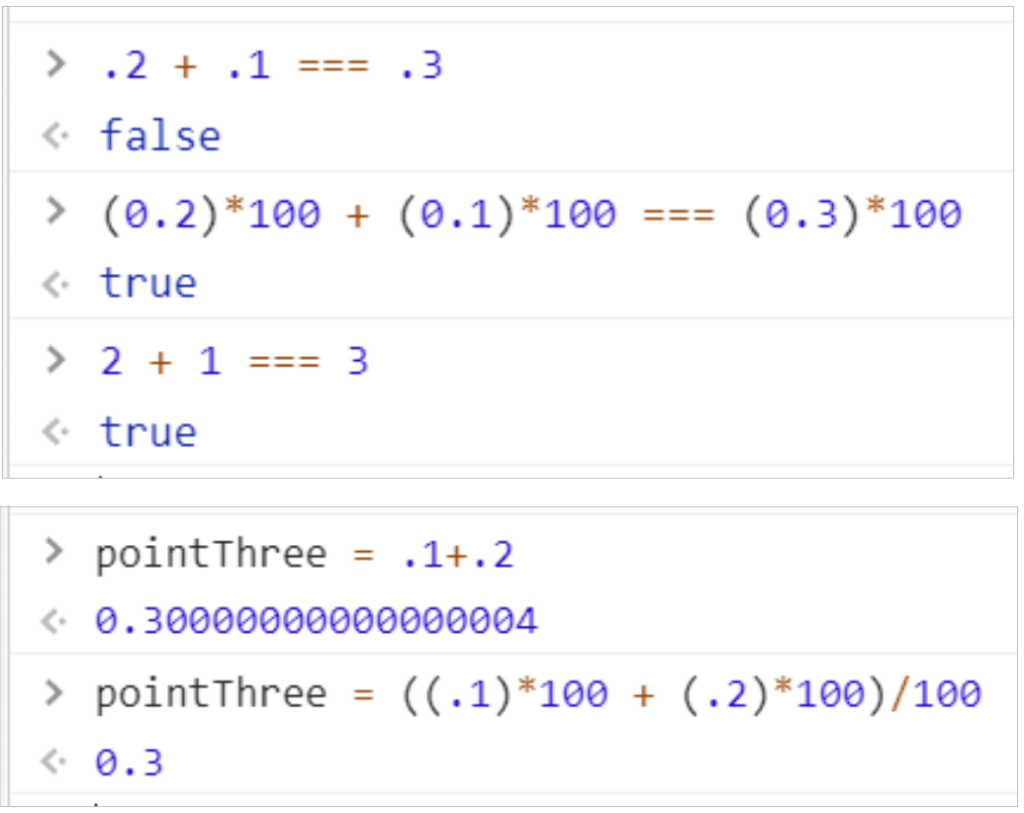

- Micro-optimizations usually depend on a low-level understanding of processors, registers, and datatypes. JavaScript can’t do any of that because it has essentially one datatype for numbers: a 64-bit floating-point value. Because of floating-point rounding, JavaScript also can’t always add correctly, which is why you will see gems like the screenshots below:

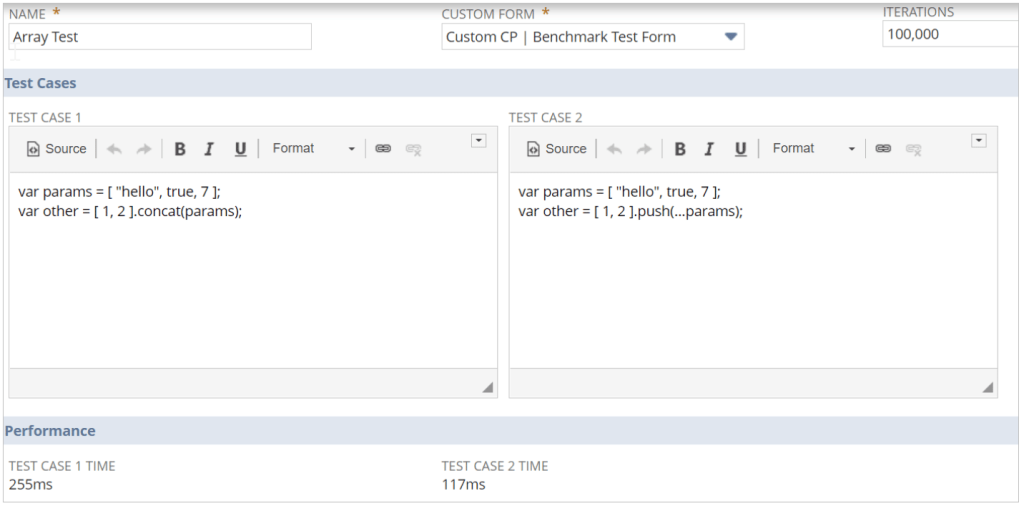

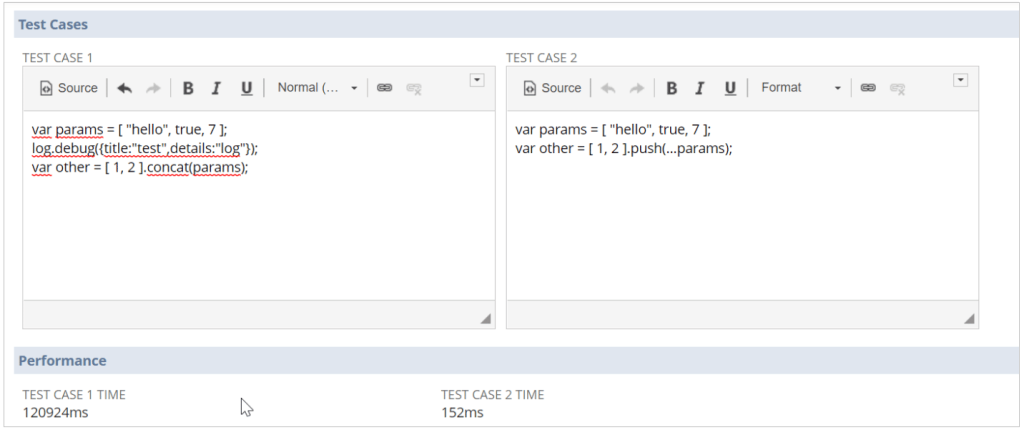
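The screenshots show a floating-point rounding artifact. JavaScript numbers are IEEE 754 double-precision values, so decimals such as 0.1 have no exact binary representation. Below is a minimal sketch that reproduces it, plus one common workaround for currency (adding in integer cents). `addAmounts` is a hypothetical helper, and the workaround is about correctness, not speed.

```typescript
// JavaScript numbers are IEEE 754 double-precision floats, so many decimal
// fractions (such as 0.1 and 0.2) cannot be stored exactly.
const naiveSum = 0.1 + 0.2;
console.log(naiveSum); // 0.30000000000000004
console.log(naiveSum === 0.3); // false

// One common workaround for currency: add whole cents as integers, then
// convert back to a decimal amount at the end.
function addAmounts(amounts: number[]): number {
  const cents = amounts.reduce((sum, amount) => sum + Math.round(amount * 100), 0);
  return cents / 100;
}

console.log(addAmounts([0.1, 0.2])); // 0.3
```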

Typically, the payoff from advanced data structures and algorithms isn’t realized until you reach large datasets that exceed the usual 10,000 elements, and in JavaScript it sometimes never arrives. In the NetSuite ecosystem, these structures are actually harmful: they add overhead, test cases, and complexity while providing no benefit. Take a look at the example below using a benchmarking tool I created:

I wanted to know which array operation was faster in NetSuite. I had a Suitelet run both examples 100,000 times each and received the following results: 255ms vs 117ms.

I was able to run an entire benchmark of 100,000 iterations for both unit tests in significantly less time than a single average HTTPS request (1500ms).

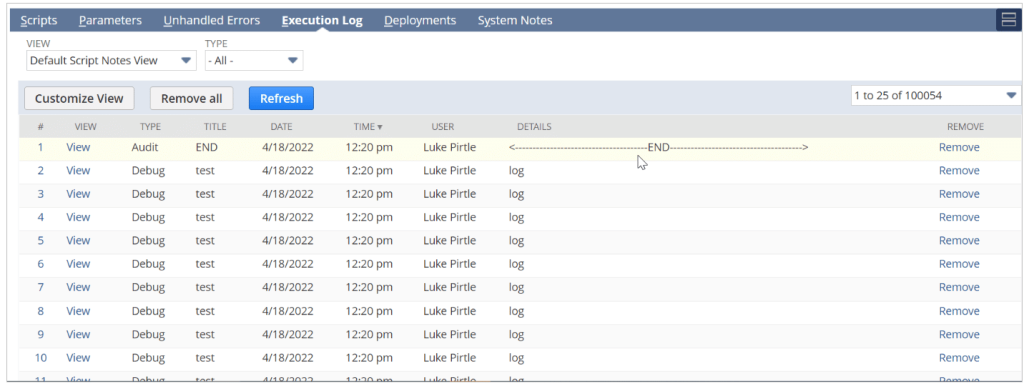
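The benchmarking tool and the two array operations from the run above aren’t shown, so the following is only a minimal harness in the same spirit, with placeholder operations. `benchmark`, `doubleWithLoop`, and `doubleWithMap` are hypothetical. The harness measures wall-clock time with `Date.now()`, so use many iterations to rise above its millisecond resolution. Numbers from a local run won’t necessarily match what you see in NetSuite.

```typescript
// Runs `fn` a fixed number of times and returns the total elapsed milliseconds.
function benchmark(iterations: number, fn: () => void): number {
  const start = Date.now();
  for (let i = 0; i < iterations; i++) {
    fn();
  }
  return Date.now() - start;
}

const source = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10];

// Placeholder variant A: build the result with an explicit loop and push.
function doubleWithLoop(): number[] {
  const out: number[] = [];
  for (let i = 0; i < source.length; i++) {
    out.push(source[i] * 2);
  }
  return out;
}

// Placeholder variant B: build the result with Array.prototype.map.
function doubleWithMap(): number[] {
  return source.map((n) => n * 2);
}

const iterations = 100000;
console.log(`loop: ${benchmark(iterations, doubleWithLoop)} ms`);
console.log(`map:  ${benchmark(iterations, doubleWithMap)} ms`);
```

Whichever variant wins, both finish 100,000 iterations far faster than a single network round trip, which is the point of the comparison.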

Now, watch what happens when I add a single log to this operation. One log call per iteration turned 0.152 seconds into twelve whole seconds.

JavaScript code is fast; network requests and API calls are not. Use this information as best you can. Don’t overcomplicate your JavaScript; if you want to optimize performance, you can have much more impact by removing unnecessary logs first.
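The difference between logging every iteration and logging once can be sketched with an injected logger. `LogFn`, `sumChatty`, and `sumQuiet` are hypothetical. In SuiteScript, the real logger would be the N/log module, whose methods take a title and details. The counting logger below only tallies calls, so it shows how many log writes each approach makes, not how long they take.

```typescript
// Hypothetical logger signature standing in for a platform logger.
type LogFn = (title: string, details: string) => void;

// Per-iteration logging: one log write for every row processed.
function sumChatty(rows: number[], log: LogFn): number {
  let total = 0;
  for (const row of rows) {
    total += row;
    log("Got here", `row=${row}, running total=${total}`);
  }
  return total;
}

// Summary logging: do the work first, then write one informative entry.
function sumQuiet(rows: number[], log: LogFn): number {
  let total = 0;
  for (const row of rows) {
    total += row;
  }
  log("Rows summed", `count=${rows.length}, total=${total}`);
  return total;
}

// A counting logger: tallies calls instead of writing anything.
let logCalls = 0;
const countingLog: LogFn = () => {
  logCalls++;
};

const rows = Array.from({ length: 1000 }, (_, i) => i);
sumChatty(rows, countingLog);
const chattyCalls = logCalls;

logCalls = 0;
sumQuiet(rows, countingLog);
const quietCalls = logCalls;

console.log(chattyCalls, quietCalls); // 1000 1
```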

The strategy should not be to implement a hashmap or binary search to make things lightning fast; it should be to implement these things where they make better quality code. These features aren’t taboo; their misuse is. I don’t have a pet peeve against complex code. I write a lot of crazy Map/Reduce scripts and have even used classes on more than one occasion. The difference is that I used a class because the data structures were polymorphic and benefited from the approach. Code can be basic or complicated, as long as the complexity is not driven by a misguided attempt at optimization.
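Here is a minimal sketch of class use where polymorphism earns its keep. Each hypothetical line type owns its own amount rule, and callers total any mix of lines without checking types. `Line`, `ItemLine`, `DiscountLine`, and `orderTotal` are illustrative names, not taken from the author’s scripts. The payoff is readability and extensibility, not speed.

```typescript
// Base type for any transaction line; each subclass supplies its own amount rule.
abstract class Line {
  constructor(readonly quantity: number, readonly rate: number) {}
  abstract amount(): number;
}

// A regular item line: quantity times rate.
class ItemLine extends Line {
  amount(): number {
    return this.quantity * this.rate;
  }
}

// A discount line reduces the total, so its amount is negative.
class DiscountLine extends Line {
  amount(): number {
    return -this.quantity * this.rate;
  }
}

// Callers work with any Line without checking which kind it is.
function orderTotal(lines: Line[]): number {
  return lines.reduce((sum, line) => sum + line.amount(), 0);
}

const total = orderTotal([new ItemLine(2, 10), new ItemLine(1, 5), new DiscountLine(1, 3)]);
console.log(total); // 22
```

Adding a new line type means adding one class; `orderTotal` and its callers stay unchanged.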